
The original plan for this part was to set up a redundant php-fpm server and to fix the database backup that was not running. It ended up being more of a cleanup of the setup, but I learned a lot about docker in the process.

We will cover the following things:

- How to remove a service from the docker swarm

- Setting up a job scheduler in docker to run the backup jobs, for both files and database

Docker cleanup running services

Earlier I changed the service specification from two separate services, http1 and http2, to a single http service with two replicas. I thought that deploying the new docker-compose.yml file would update the swarm so that only the specified services were running. This is not the case: the two “old” http1 and http2 services were still running on the swarm, taking up resources.

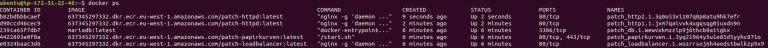

As you can see in the screenshot, the first two services are patch_http1.xxx and patch_http2.xxx from the old deployment file. If we just run docker stop on their containers, docker will start them again, because the services still exist and their restart policy tells docker to restart stopped containers. To remove them we need to run the commands

docker service rm patch_http1 and docker service rm patch_http2

Then they are removed and stopped. Monitoring running containers and removing the unused ones is a good idea.
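A minimal sketch of how this cleanup could be scripted. It assumes the stack is named patch (inferred from the patch_ prefix on the service names) and that the names passed in are the services still defined in docker-compose.yml. The function name remove_stale_services is made up for this example.

```shell
#!/bin/sh
# Remove every service in a stack that is not in the list of wanted services.
# Usage: remove_stale_services STACK WANTED_SERVICE...
remove_stale_services() {
    stack="$1"
    shift
    # Ask the swarm for the names of all services that belong to the stack.
    for svc in $(docker stack services --format '{{.Name}}' "$stack"); do
        keep=no
        for wanted in "$@"; do
            # Stack services are named <stack>_<service>.
            if [ "$svc" = "${stack}_${wanted}" ]; then
                keep=yes
            fi
        done
        if [ "$keep" = no ]; then
            docker service rm "$svc"
        fi
    done
}
```

Called as remove_stale_services patch http, this would remove patch_http1 and patch_http2 but keep patch_http. It has to run against a swarm manager node. Recent docker versions also have a --prune flag on docker stack deploy that removes services no longer referenced in the compose file, which is worth checking before scripting this by hand.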

Database backup service not running / scheduling docker containers

I noticed that the service I use for database backup was not working correctly: it was not doing the automatic backup that it was supposed to. It only made a backup when I attached to the container and ran the backup command manually. This needed to be fixed, and I thought it would be a good time to look into a more comprehensive way to schedule jobs on docker. I was hoping for an easy solution, but the more I looked, the clearer it became that there is currently no good and easy way to schedule jobs in docker.

The main problem I wanted to solve was to boot and run an image at a scheduled time, so that the container starts, does its work, and exits when it is done. Right now the two backup services run continuously but are only needed while they do the backup; the rest of the time they just idle, wasting resources.

There are many articles about scheduling jobs in docker, but it is not a smooth process at all. The problem is that docker does not have native support for starting containers at a scheduled time. It is built for keeping long-running containers up, not for short-lived containers that run and exit. It seems like there are a few different ways to do it:

- Run a normal old school cron on the docker host with docker run commands

- Have each docker image that needs scheduling include cron and do the scheduling inside each container (the container runs continuously)

- Have a single generic cron-enabled image that starts a container for each job using the docker run command

- Depend on some external infrastructure that does the scheduling to boot the docker images

I will try to explain the pros and cons of each method in turn. Many of them are also discussed in this docker issue thread, where they talk about introducing jobs as a concept in docker.

1. Cron on the docker host

This is probably the easiest method to set up, but I think it also has some serious drawbacks. First off, it is not containerized, so scheduling is now handled differently from the rest of the setup. When we scale our setup to multiple docker hosts, we don’t want the cron jobs to depend on a single node being up. But we can’t just add the crontab to all the docker hosts either, because that would cause the same jobs to run multiple times on different hosts, which would cause problems and consume resources unnecessarily. So I would not even consider this option.

2. Cron inside each docker image

If we move the scheduling responsibility into the same image that handles the job, we have improved on option 1: the scheduling is now part of docker, and we can use exactly the same infrastructure that we use for every other service.

The drawback is that the image now has two responsibilities, both the job and the scheduling, which clashes a bit with the idea of docker. It also requires the container to run continuously, consuming resources. This might not be a big problem: the two backup containers I use consume 0.6 MB and 6.5 MB of RAM and 0% CPU when not in use.

Normally a docker container runs a single command, and if this command crashes the container exits, docker notices, and it restarts the container. But with this setup the monitored command is crond and not the job. So if the job fails with a non-zero exit code, docker will never know, and another way to monitor the job is needed.
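One way to at least make such failures visible is to wrap the job in a small script that reports the exit code. This is only a sketch: the name run_and_report and the output format are made up for this example, and what to do with the output (log file, alert, health check) is left open.

```shell
#!/bin/sh
# Run a job and print one line that states whether it succeeded.
# Usage: run_and_report JOB_NAME COMMAND [ARG...]
run_and_report() {
    job_name="$1"
    shift
    status=0
    # Run the job and remember its exit code instead of discarding it.
    "$@" || status=$?
    if [ "$status" -eq 0 ]; then
        echo "job=$job_name status=ok"
    else
        echo "job=$job_name status=failed exit=$status" >&2
    fi
    # Pass the job's exit code on to the caller.
    return "$status"
}
```

A crontab entry inside the container would then call the wrapper instead of calling the backup command directly, so every run leaves a success or failure line behind.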

Depending on how the image implements it, even crond can crash without docker finding out. For example, in this setup the command monitored by docker is

CMD cron && tail -f /var/log/cron.log

The problem here is that the cron command runs in the background and tail is the command being monitored, so if cron crashes, docker will just keep seeing that the tail command is still running. So beware of what is monitored. In many cases the cron command actually supports a foreground mode, so use it if possible.
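A sketch of what foreground mode could look like in an entrypoint script. It assumes Debian's cron, where the -f flag keeps it in the foreground (busybox crond takes -f as well). The file name entrypoint.sh, the start argument, and the function name are made up for this example.

```shell
#!/bin/sh
# Hypothetical entrypoint.sh: make the scheduler the container's main process.

# Replace this shell with the given command. The command is then the process
# docker monitors, so the container stops as soon as the command exits.
run_in_foreground() {
    exec "$@"
}

# Only start cron when called with "start", so the file can also be sourced.
if [ "${1:-}" = "start" ]; then
    # -f keeps Debian's cron (and busybox crond) in the foreground.
    run_in_foreground cron -f
fi
```

In a Dockerfile this could be wired up with ENTRYPOINT ["/entrypoint.sh", "start"] in place of the CMD line above. Without the tail process the cron log is no longer printed by the container, so the job output has to be sent somewhere else.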

3. Single generic cron image that starts other images

As explained here, we can set up a docker image that uses the docker API to run other docker images. Now the responsibility is split across two images, which is better, but it makes the infrastructure a bit more complex, and we still need one container running continuously to manage the schedule. As I discovered, this has some problems with docker swarm mode, explained later.

4. Using external infrastructure

Most cloud providers with native docker support, like AWS, offer some kind of scheduler for running images. For example, the AWS ECS scheduler can run tasks on the docker hosts on a schedule, but it requires that we use their container orchestration system instead of our own docker swarm, as I do currently. Many other scheduling systems also exist, such as ActiveBatch, Rundeck, and Chronos. For all of them, the infrastructure requirements are a bit much for just running a backup job, which is all I want currently.

My setup

I think option 4, using the scheduling system native to the cloud provider, is actually the best option, but it is not possible for me currently, so that will have to wait until I port my setup to AWS ECS.

So the next best option is 3. I tried to set it up as explained in the article I linked to, but I ended up with a problem that I was unable to solve. I deploy my services using docker swarm mode in a stack. A stack has its own network that is shared between the services in the stack. By default this network is protected, so other containers running on the same host are not able to communicate over it. For the backup containers started by the cron scheduler to have access to the network, the scheduler needs to start them as services. But the docker image from the article does not support this: it starts each job as a normal docker container, which means the job can’t connect to the stack’s network. So this solution did not work for me.
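For illustration, starting a job as a one-off service could look roughly like the sketch below. This is not what the image from the article does and it was not part of this setup. The function name run_job_as_service is made up, and the network name in the usage note assumes the stack's default network follows the usual stack name plus _default pattern.

```shell
#!/bin/sh
# Start a job as a swarm service that runs once and is not restarted.
# Usage: run_job_as_service NETWORK SERVICE_NAME IMAGE [COMMAND...]
run_job_as_service() {
    network="$1"
    name="$2"
    image="$3"
    shift 3
    # Remove a leftover service from an earlier run; ignore "not found".
    docker service rm "$name" >/dev/null 2>&1 || true
    # --restart-condition none stops the swarm from restarting the task
    # after the job has finished.
    docker service create \
        --detach \
        --name "$name" \
        --network "$network" \
        --restart-condition none \
        "$image" "$@"
}
```

A call such as run_job_as_service patch_default backup-job some/backup-image would attach the job to the stack's network. It has to be run against a swarm manager node, so a scheduler container using it would need access to the docker API on a manager.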

So in the end I went with option 2. Both the mysql and the file backup docker images I use have built-in scheduling capabilities, so it works fine for now.

Both images have the problem described above, where docker monitors the tail process instead of cron.
